Like so many of you, I’ve been immersed in AI for the past couple of years trying to ferret out the big impacts this tech will have on the news and sports television industries.

By now StreamTV Insider readers know much about the latest in LLMs. But OpenAI’s release of GPT-4.1 in April 2025 was a watershed that few seem to have noticed.

Context Windows

GPT-4.1’s ability to ingest 1 million tokens in a prompt – 8x OpenAI’s previous maximum – and its fine-tuned ability to ‘find a needle’ in that larger haystack have massive ramifications for both news and sports – and by extension for our society writ large. This dramatic increase is nothing less than another step-change, one that is going to transform how we skim through videos – news, sports, archives, e-learning – until it feels like scanning a table of contents.

Here's a true story that illustrates some next-level impacts of this context window revolution.

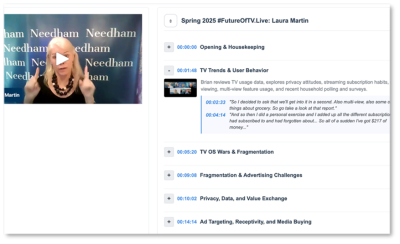

I host a free quarterly Zoom webinar called #FutureOfTV.Live. (Next one’s this Friday, August 1, at 9am.) As a time-shift TV guy, I’ve always known that on-demand webinars that aren’t condensed, edited or clipped are tough to get through. It’s not the same as viewing it live. But take that file and process it with an LLM, and it becomes a source of priceless nuggets of wisdom perfect for highlights, repurposed segments and visual insights.

Thus began my AI journey to automate this process for myself. I learned a lot more than I bargained for in the process.

Over the past year, I’ve vibe-coded an application that used GPT-4o – and then GPT-4.1 – to automatically chapter, quote-pull, and display these webinars with clickable time-codes by simply uploading the file into my WebinarVault. (Image at right is an embedded portal from my access-protected resource library.)
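For readers curious what "clickable time-codes" can look like in code, here is a minimal sketch. It is not the WebinarVault implementation. The `Chapter` type, the example chapter titles, the example URL and the `?t=<seconds>` query parameter are all assumptions made for illustration, and real video players differ in how they accept a start offset.

```python
from dataclasses import dataclass


@dataclass
class Chapter:
    start_seconds: int  # offset into the video where the chapter begins
    title: str          # chapter title, e.g. as proposed by an LLM


def format_timecode(seconds: int) -> str:
    """Render an offset in seconds as HH:MM:SS."""
    hours, remainder = divmod(seconds, 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def chapter_link(video_url: str, chapter: Chapter) -> str:
    """Build a Markdown link that jumps to the chapter start.

    Assumption: the player accepts a `?t=<seconds>` query parameter.
    """
    label = f"{format_timecode(chapter.start_seconds)} {chapter.title}"
    return f"[{label}]({video_url}?t={chapter.start_seconds})"


# Hypothetical chapters, for illustration only.
chapters = [
    Chapter(0, "Welcome"),
    Chapter(754, "Context windows"),
    Chapter(3725, "Audience Q&A"),
]
for ch in chapters:
    print(chapter_link("https://example.com/webinar", ch))
```

Keeping the raw offset in seconds alongside the formatted label means the same chapter list can drive both the on-page display and the seek action.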

Until May, when I implemented 4.1, I had to cut the videos to 90 minutes max. For reference, the $50M VC-and-Nvidia-backed TwelveLabs can only handle 1 hour of video in its Pegasus generative model. Let that sink in. One hour. At a time.

Let’s jump to two big steps.

Step one. Context Switching.

I decided to experiment with news content. It was great for webinars, but how would it perform on something totally different?

I uploaded a few Senate confirmation hearings. Seven one-hour videos of Cory Booker’s marathon Senate filibuster. A bunch of local news shows and sports wrap-ups.

And I tediously had to cut everything down to an hour. My hands were tired.
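That kind of hour-by-hour cutting can also be done on the transcript instead of the video file. The following is a minimal sketch under stated assumptions: the transcript is already available as `(start_seconds, text)` pairs, and the function name `split_by_duration` and the data shape are hypothetical, not taken from any particular tool.

```python
from typing import List, Tuple

Segment = Tuple[float, str]  # (start time in seconds, spoken text)


def split_by_duration(
    segments: List[Segment], max_seconds: float = 3600.0
) -> List[List[Segment]]:
    """Group transcript segments into consecutive fixed-length windows.

    A segment goes into the window that contains its start time, so no
    segment is ever split in the middle of its text.
    """
    windows: List[List[Segment]] = []
    for start, text in segments:
        index = int(start // max_seconds)
        # Grow the list until the target window exists.
        while len(windows) <= index:
            windows.append([])
        windows[index].append((start, text))
    # Drop windows that contain no speech at all.
    return [window for window in windows if window]


# Hypothetical transcript, for illustration only.
transcript = [(0.0, "Opening"), (1800.0, "Midpoint"), (3600.0, "Hour two"), (9000.0, "Closing")]
for number, window in enumerate(split_by_duration(transcript), start=1):
    print(number, [text for _, text in window])
```

Each resulting window can then be sent to a model on its own, at the cost of the model losing sight of what was said in the other windows.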

But I was impressed with the results. Interesting. Today’s LLMs have so much pre-trained context that they are like ambitious young adults who just need a little bit of coaching (read: prompting) to run fast in any genre.

No longer does “fine-tuning” a model mean massive training datasets and tedious labeling workflows. Rather, a small group of clever users can instead become quite expert at deploying prompts and workflows to ensure they’re orchestrating the precise color and shape of superintelligence that will solve their content processing needs.

This will have dramatic implications for the discipline of creating highlights across the near-infinite content categories represented by today’s linear, streaming and social media universe.

Step two. Size Matters.

In May, I switched out 4o for 4.1. Wow. Now I can load 8 or more hours of transcribed content directly into this thing and get good – if not great – outputs.

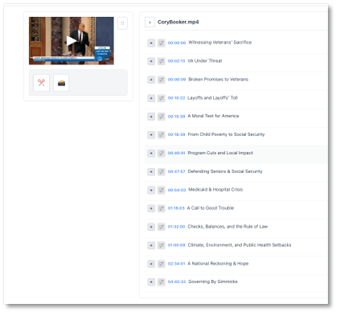

At left is a difficult-to-see image of a single seven-and-a-half-hour file of Cory Booker on the Senate floor this past spring.

If you could see the snapshot, you’d see the model’s inability to keep the chapters evenly spaced throughout the seven hours, as per the prompt.

But the next release will surely be better, and this gives us a sneak peek into an exceptionally powerful skill: The ability to skim long videos the way we today skim a table of contents.

Today’s journalists need to pull quotes, facts and fallacies faster than ever, with less effort, from an ever-increasing volume of newsworthy content arriving daily.

The most important thing that LLMs bring to the AI table is the ability to understand context.

And the most important skill that some LLMs have – and not others – might well be the ability to crunch through massive volumes of transcript text. The PhDs call the amount of text a model can take in at once its “Context Window,” and this dramatic increase is going to change everything about the way we watch, curate, and produce video, including summaries, condensed games, and extended, personalized highlight reels.

Old School Alchemy

I’ve also been immersed in ‘older school’ computer vision models like Face Recognition, Optical Character Recognition (OCR), as well as Object, Landmark and Logo detection.

Not to mention the even-more-confusing Vision Language Models – a hybrid of language and image-based models which can produce Scene Descriptions. (The best-known open-source version is called CLIP. These can be fantastic for metadata generation.)

Throughout this process, I’ve come to realize the sheer volume of possibilities for AI solutions is, quite simply, overwhelming. The workflows are complex, the use cases are specific, and the time trade-offs and business decisions that come with tackling tough content problems using a combination of the tools above are enormous. Paralyzing, in fact.

Are you searching historical archives on prem? Or the past month on cloud? Does your footage have audio? Are you cutting highlights of a local high school game? Which sport? Or are you searching for a quote from a five-hour-long municipal hearing?

Spend a few minutes on Hugging Face and you’ll see there are many thousands of models attacking every problem under the sun, each with its own approach and its own vanity metrics to benchmark against.

The word that comes to mind for me is Alchemy.

I mean that in two ways. Yes, there’s a feel of magic to the discipline that, in the end, is not real. But more importantly, it’s worth noting that alchemists developed essential chemical techniques, discovered new elements and compounds, and in fact laid the groundwork for modern chemistry.

And in that sense, I have one final thought.

Two Words, One Takeaway

The best approaches going forward may well be an alchemy-like hybrid of all of these.

But my gut sense today is that the thing that will truly rule them all will be, in fact, a clever team of humans using a clever set of LLM wrappers and video workflows. Language alchemy indeed.

Comment, reach out, and sign up for my next #FutureOfTV.Live Zoomcast here.

Brian Ring is a TV tech expert with deep experience in full-stack GTM, Product Market Fit and Revenue Growth motions. He’s worked with Amagi, Synamedia, MediaKind, Mux, Ateme and many others. His expertise includes all segments of video tech infrastructure including content creation, management, distribution, and monetization. Current consulting projects are focused on LLM-integrated media workflows; the CTV programmatic stack; the next evolution of FAST.

Brian is an active thought leader, writing and moderating panels for Streaming Media, NAB Show, Stream TV Show, Sports Video Group, and other major industry events.

He holds an MBA from The Wharton School and a BA in Psychology from UC Berkeley.

Industry Voices are opinion columns written by outside contributors — often industry experts or analysts — who are invited to the conversation by StreamTV Insider staff. They do not necessarily represent the opinions of StreamTV Insider.